The power grid is evolving from human command to machine learning: AI, guided by engineers, enables adaptive, self-learning systems that improve reliability, speed, and resilience as AI itself reshapes energy demand.

For more than a century, electric power systems have operated under a single organizing principle: control. Utilities designed the grid to obey human instruction, not to think. Engineers defined operational parameters—voltage, frequency, load margins—and the machinery complied. The logic was simple, deterministic, and profoundly effective. Electricity flowed because every part of the system, from the turbine to the transformer, knew its place in a chain of command.

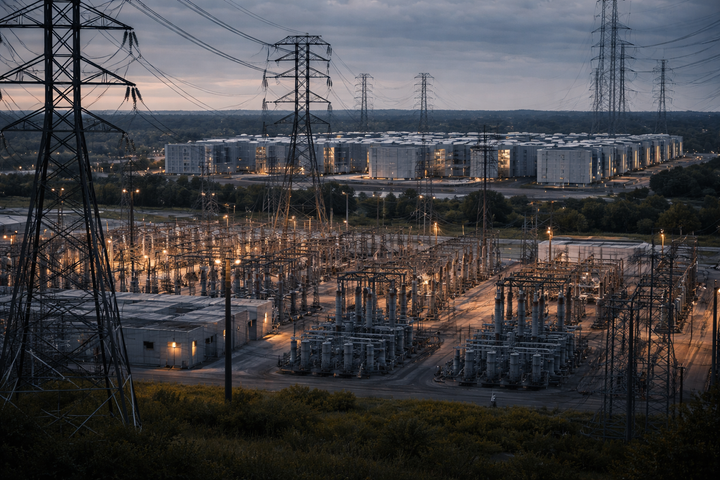

But the world that built that grid no longer exists. What was once a predictable architecture of load and supply has become a living system of volatility. Solar and wind power introduce intermittent rhythms, electric vehicles generate stochastic demand, and data centers—driven by the exponential hunger of artificial intelligence—have begun to redraw the geography of electricity itself. In Virginia, for example, hyperscale campuses now consume more power than entire cities, prompting Dominion Energy to warn of capacity shortfalls within the decade.¹ Across the country, utilities face similar dilemmas: how to serve loads that move faster than their planning cycles can adapt.

The traditional model—human command, machine compliance—is reaching its cognitive limit. The grid must now evolve from something we manage to something that learns.

The Limits of Control

Command-and-control logic worked when the grid was linear. Engineers could model the system as a series of known relationships: if X happens, apply Y. Frequency deviates? Adjust governor setpoints. Load spikes? Dispatch spinning reserve. But these rules presume stability—that weather is seasonal, demand is cyclical, and failure is rare. None of those assumptions hold true anymore.

Static line ratings, for instance, still dominate transmission planning even though they assume “average” weather conditions that scarcely exist. FERC Order 881, issued in December 2021, recognized this mismatch and required transmission providers to adopt Ambient Adjusted Ratings: capacity values that flex with forecast ambient air temperature.² (Ratings that also respond to wind and other measured conditions are Dynamic Line Ratings, which the order does not mandate.) Yet implementation lags. Decision latency remains high; switching sequences depend on operator judgment and the finite bandwidth of human attention. Control rooms are flooded with alarms, tens of thousands per day in large balancing authorities, creating a signal-to-noise problem that erodes situational awareness.³
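The gap between a static rating and an ambient-adjusted one can be illustrated with a rough calculation. The sketch below is not how any utility or FERC computes ratings; it assumes, for illustration only, that ampacity scales with the square root of the conductor-to-air temperature rise, ignoring wind, solar heating, and resistance changes. Production ratings rely on a full heat-balance method such as IEEE 738. The function name and parameters are hypothetical.

```python
import math


def ambient_adjusted_rating(static_rating_amps, max_conductor_temp_c,
                            assumed_ambient_c, actual_ambient_c):
    """Scale a static rating to a different ambient air temperature.

    Assumption of this sketch: ampacity is proportional to the square
    root of (conductor limit - ambient temperature). Wind, solar gain,
    and temperature-dependent resistance are deliberately ignored.
    """
    reference_rise = max_conductor_temp_c - assumed_ambient_c
    if reference_rise <= 0:
        raise ValueError("assumed ambient must be below the conductor limit")

    actual_rise = max_conductor_temp_c - actual_ambient_c
    if actual_rise <= 0:
        # Air at or above the conductor limit leaves no thermal headroom.
        return 0.0

    return static_rating_amps * math.sqrt(actual_rise / reference_rise)


# Illustrative numbers only: a line rated 1000 A at an assumed 40 C ambient,
# with a 75 C conductor limit, evaluated on a 10 C day.
cool_day_rating = ambient_adjusted_rating(1000.0, 75.0, 40.0, 10.0)
```

Under these simplified assumptions the cool-day rating comes out roughly 36 percent above the static value, which is the kind of headroom a fixed rating leaves unused.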

Meanwhile, distributed energy resources (DERs) are proliferating faster than operators can model them. Rooftop solar, behind-the-meter batteries, and electric vehicle fleets are no longer passive loads—they are dynamic, interactive nodes at the edge of the grid. Each introduces new behaviors that break old assumptions. Traditional forecasting tools, designed for aggregate loads, struggle to capture these nonlinearities. A single AI data center can draw 300 megawatts continuously, its load shape governed not by human demand but by machine inference cycles.⁴

The result is structural stress: longer interconnection queues, rising congestion costs, and a creeping erosion of reliability margins. We are entering a period where the grid’s complexity exceeds our ability to script it.

Toward a Learning System

A self-learning grid is not the same as an autonomous one. It is not about handing over control to algorithms; it is about constructing adaptive intelligence around human judgment. The shift resembles what aviation experienced when autopilot systems began to augment, rather than replace, pilots. In power systems, this means embedding continuous learning loops into the fabric of operations.

The data already exists. Supervisory Control and Data Acquisition (SCADA) systems capture operating states every few seconds. Phasor Measurement Units (PMUs) take time-synchronized measurements many times per second, recording oscillation signatures that reveal stability margins. Advanced Metering Infrastructure (AMI) traces household demand and EV charging behavior. When cross-referenced with weather and geospatial data, these signals form a sensory network capable of perception.⁵ The next step is cognition: algorithms that can generalize from patterns, anticipate rather than react, and tune thresholds dynamically.

Imagine a transmission corridor that “learns” its own safe operating limits. When ambient temperature drops or wind increases conductor cooling, the system automatically raises its line rating within verified bounds. Or consider a substation that recognizes early indicators of transformer insulation degradation—detected as subtle harmonics in voltage waveforms—and schedules maintenance before a fault occurs. These are not speculative futures; they are pilots already under way at utilities from National Grid in the United Kingdom to Oncor in Texas.⁶

The Architecture of Learning

To build a cognitive grid, we must combine disciplines that have long operated in silos: power engineering, data science, and systems psychology. Machine learning provides the statistical scaffolding, but human expertise defines the boundaries. Engineers still set protection zones, safety margins, and acceptable risk levels. AI’s role is to explore the vast parameter space in between—millions of combinations too complex for deterministic modeling.
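One way to picture this division of labor is a guardrail that sits between a model's proposal and the equipment. The sketch below is a hypothetical illustration, not a description of any deployed system: `Limits` and `apply_guardrail` are invented names, and the per-unit voltage band in the example is an assumed value.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    """Engineer-approved operating band for one controllable quantity."""
    low: float
    high: float


def apply_guardrail(proposed, limits):
    """Clamp a model's proposed setpoint to the approved band.

    Returns (value_to_apply, was_clamped). A clamped proposal is a
    natural trigger for operator review in a human-in-the-loop design.
    """
    if limits.low > limits.high:
        raise ValueError("limits are inverted")
    if not math.isfinite(proposed):
        # NaN or infinity never reaches the field; reject outright.
        raise ValueError("proposal is not a finite number")

    applied = min(max(proposed, limits.low), limits.high)
    return applied, applied != proposed


# Assumed band of 0.95 to 1.05 per unit; the model asks for 1.08.
value, clamped = apply_guardrail(1.08, Limits(low=0.95, high=1.05))
# value == 1.05 and clamped is True
```

The model is free to search anywhere inside the band, while the band itself stays under engineering control and changes only through the normal review process.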

Dynamic Line Ratings are the first manifestation of this philosophy: capacity becomes a moving variable, tuned to real-world conditions rather than fixed assumptions. Predictive maintenance follows naturally, as sensors feed degradation models that evolve with each operational cycle. Learning dispatch agents—trained via reinforcement learning—can balance voltage and power factor faster than rule-based optimization, discovering new control strategies through simulation. And adaptive protection systems, already in development by researchers at Pacific Northwest National Laboratory, adjust relay settings in response to network reconfiguration while maintaining NERC compliance.⁷

The pattern is clear. Every operational domain that once depended on static logic can be recast as a learning problem.

Human-Centered Governance

The transition from control to cognition is not a surrender to automation—it is an invitation to redesign the partnership between humans and machines. In a cognitive grid, operators do not vanish; they ascend. Instead of manually approving every action, they supervise learning outcomes. Protection engineers validate adaptive settings rather than recalculating them. System planners become curators of models that evolve continuously instead of authors of studies frozen in time.

This human-in-the-loop architecture is essential for both ethical and practical reasons. AI can optimize variables, but it cannot articulate values. Deciding how much reliability is “enough,” or what level of risk is acceptable for a community, remains a human prerogative. As philosopher Hubert Dreyfus warned decades ago, intelligence without embodiment lacks context.⁸ The grid, as a socio-technical system, must retain its human conscience even as it acquires synthetic cognition.

The governance challenge, then, is to codify transparency, explainability, and accountability. Every adaptive decision must be traceable—why it was made, under what data conditions, and with what confidence. The energy sector can learn from aviation’s “flight recorder” principle: every algorithmic action leaves an audit trail. Without such frameworks, learning systems risk drifting into ungoverned autonomy, where optimization supersedes judgment.
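The "flight recorder" idea can be sketched as an append-only log in which each entry stores the action, its rationale, the input data, and the model's confidence, and is chained to the previous entry by a hash so that later edits are detectable. This is a minimal illustration using only the Python standard library; the class and field names are hypothetical and do not come from any standard or vendor product.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass(frozen=True)
class DecisionRecord:
    timestamp: str     # ISO 8601 string supplied by the caller
    action: str        # what the system did
    rationale: str     # why it was done
    inputs: dict       # data conditions at decision time (JSON-serializable)
    confidence: float  # model confidence in the range 0..1
    prev_hash: str     # hash of the preceding record


def record_hash(record):
    """SHA-256 over a canonical JSON encoding of the record."""
    payload = json.dumps(asdict(record), sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class AuditTrail:
    def __init__(self):
        self._records = []

    def append(self, timestamp, action, rationale, inputs, confidence):
        prev = record_hash(self._records[-1]) if self._records else GENESIS_HASH
        record = DecisionRecord(timestamp, action, rationale,
                                inputs, confidence, prev)
        self._records.append(record)
        return record

    def verify(self):
        """Return True if every record still matches its successor's link."""
        prev = GENESIS_HASH
        for record in self._records:
            if record.prev_hash != prev:
                return False
            prev = record_hash(record)
        return True
```

A chain like this only exposes tampering with entries that have a successor; protecting the newest entry would require storing its hash somewhere outside the log, which is left out of the sketch.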

Why Timing Matters

The urgency of this transformation cannot be overstated. The AI economy is rewriting electricity demand faster than any prior technological wave. Between 2020 and 2025, U.S. household electricity prices rose roughly twenty-four percent,⁹ partly reflecting infrastructure strain from data-center expansion. Developers now approach utilities not with price queries but with megawatt deadlines: Can we get 400 MW by 2026? The answer increasingly depends on whether the grid itself can learn.

Building new transmission is necessary but insufficient. Permitting timelines stretch across a decade; interregional lines remain politically fraught. Intelligence, however, can be deployed faster than steel. By applying learning models to existing assets (raising throughput through topology optimization, dynamic ratings, and predictive coordination), utilities can unlock latent capacity equivalent to years of capital buildout. In 2023, DOE’s Grid Deployment Office estimated that advanced grid management could defer $50 billion in infrastructure costs by 2035.¹⁰

The stakes extend beyond economics. As climate volatility intensifies, resilience will hinge on adaptability. A self-learning grid can self-correct under stress, rerouting power during wildfires or hurricanes in ways no static script could anticipate. In this sense, cognition becomes a resilience strategy—a form of collective intelligence distributed across conductors, substations, and sensors.

The Cognitive Frontier

If today’s power grid resembles the nervous system of industrial civilization, tomorrow’s will resemble its brain. Synapses form where data streams converge: PMUs, inverters, and weather nodes exchanging information at sub-second speeds. Feedback loops strengthen or weaken depending on performance, much like neural plasticity. Over time, the system develops memory—an archive of operational experience that informs future decisions.

The analogy is more than poetic. Researchers at the Electric Power Research Institute are experimenting with reinforcement learning agents that “remember” past grid states to improve response during contingencies.¹¹ European operators are testing digital twins that simulate millions of potential configurations, learning optimal dispatch sequences before deploying them in the field. Each iteration moves the grid closer to cognition.

Yet this evolution also raises philosophical questions. What does it mean for infrastructure to learn? Can a system designed for obedience acquire agency without destabilizing trust? The answer lies in design intent. A learning grid is not an independent intelligence; it is a collaborative one. Its purpose is not to decide but to assist—to extend human foresight across scales and timeframes we cannot otherwise perceive.

Policy as Architecture

Policy must evolve alongside technology. FERC Order 881 marked a turning point by embedding a form of learning logic, dynamic adaptation, into regulation. Future rulemakings could go further, mandating data transparency, standardized model validation, and AI governance frameworks akin to medical device certification. At the state level, regulators can incentivize learning-based reliability metrics rather than purely capital expenditure.

Internationally, the European Union’s AI Act offers a preview of risk-based governance: AI used as a safety component in critical infrastructure, including electricity supply, is classified as “high-risk” and subject to conformity assessment.¹² The United States lacks such coherence, but momentum is building. DOE’s recent “AI for Energy” initiative emphasizes explainability and safety in critical infrastructure applications, signaling an awareness that intelligence must be accompanied by accountability.¹³

The transition will also reshape workforce development. Future operators will need fluency not only in power flow equations but in data ethics, probabilistic modeling, and human-machine interface design. Training the grid, in this sense, means training ourselves—to think symbiotically with systems that think back.

Every technological shift carries an ethical dimension. The command-and-control era reflected the industrial mindset of its time: mastery over nature through calculation and authority. The cognitive era, by contrast, invites humility. It asks engineers to recognize that complexity can no longer be contained by control alone. Learning, not domination, becomes the organizing principle.

This is not a loss of rigor; it is an evolution of it. To build a grid that learns is to acknowledge that intelligence—human or artificial—is always bounded, always provisional. Resilience arises not from perfection but from adaptation. In this sense, the future of the grid parallels the future of humanity in the age of AI: survival through cognition.

Conclusion

The grid we inherited was built to obey. The grid we need must be able to learn.

If the twentieth century’s triumph was engineering stability into an unruly force of nature, the twenty-first must engineer adaptability into an unstable world. The command-and-control model brought us electrification; the learn-and-adapt model will bring us decarbonization, digitalization, and resilience.

We cannot program the future by adding more rules to an increasingly dynamic world. We must train it—patiently, transparently, and with moral intent. The future grid will not be managed. It will be taught.

Notes

1. Dominion Energy, Integrated Resource Plan 2023, Virginia State Corporation Commission filing, May 2023.

2. Federal Energy Regulatory Commission, Order No. 881: Managing Transmission Line Ratings, December 2021.

3. North American Electric Reliability Corporation (NERC), Situational Awareness Technical Reference Document, 2022.

4. U.S. Department of Energy, Data Center Energy Consumption Trends, 2024.

5. Electric Power Research Institute (EPRI), Grid Learning Systems: Foundations and Pathways, 2023.

6. National Grid UK, Dynamic Line Rating Pilot Report, 2022; Oncor Electric Delivery, AI-Enhanced Asset Management, 2023.

7. Pacific Northwest National Laboratory (PNNL), Adaptive Protection and Learning Relays, 2024.

8. Hubert L. Dreyfus, What Computers Can’t Do: The Limits of Artificial Intelligence (New York: Harper & Row, 1972).

9. U.S. Energy Information Administration, Electric Power Monthly, August 2025.

10. U.S. Department of Energy, Grid Modernization Benefits Report, 2023.

11. EPRI, Reinforcement Learning for Grid Optimization, 2024.

12. European Commission, Artificial Intelligence Act, 2024.

13. U.S. Department of Energy, AI for Energy: Responsible Intelligence Framework, 2025.