Grid Innovations Without Guardrails: Why Utility AI Demands Governance

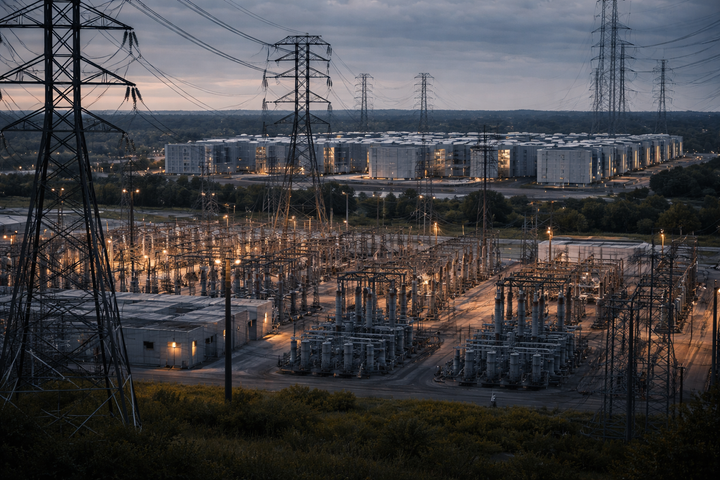

AI is transforming grid operations, from forecasting renewable output and managing EVs to detecting wildfires, but without governance the risks of bias, cyberattacks, and black-box failures grow with it. The missing link is systemic oversight that ensures transparency, safety, and accountability.