How AI Workload Flexibility Is Shaping the Grid’s Future

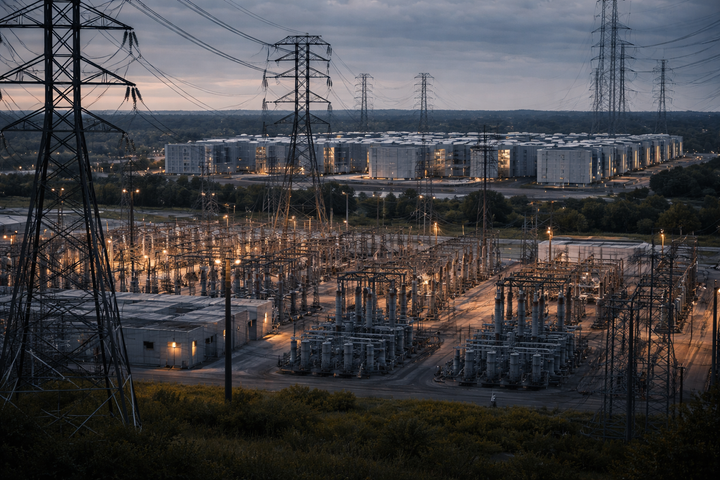

AI-driven data centers are reshaping the grid as they shift from static loads to flexible assets that can support reliability and decarbonization. With Google leading the way, this shift calls for new policies, pricing models, and equity safeguards that align digital growth with grid resilience.