AI is quietly shifting grid operations by moving judgment upstream into decision-support software. The Cognitive Grid argues legitimacy requires permission that is legible, bounded, and auditable at machine speed—implemented via EthosGrid’s runtime governance layer.

In utility control rooms in the mid-2020s, a quiet shift has been underway: artificial intelligence systems, introduced as forecasting and decision-support tools, are increasingly shaping the order and character of operational choices long before any switch is thrown or any formal accountability process begins. The emerging pattern is not new machinery entering the grid—automation has governed frequency, voltage, and dispatch for generations—but a relocation of judgment, meaning the structured prioritization of outcomes under constraint, into upstream software that ranks risks, recommends restoration sequences, and narrows the set of options presented under pressure.

As I argue in my recent book, The Cognitive Grid, electric power systems are not governed by electricity alone. They are governed by permission: by law, by regulation, and by institutional settlements that determine who may act, under what conditions, and with what accountability when consequences are public and unavoidable. Reliability is a technical achievement. Legitimacy is an institutional one.

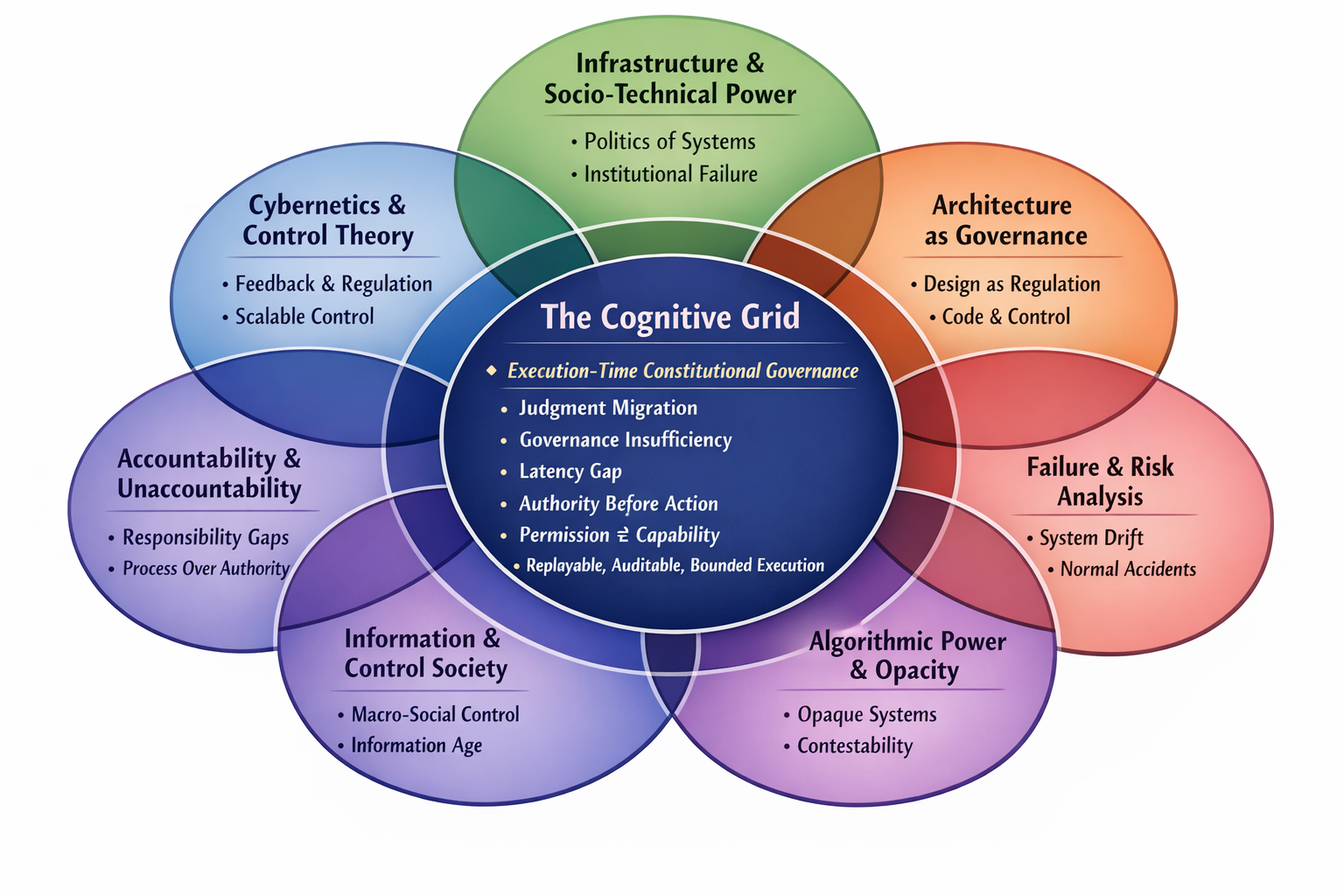

The book itself is a modest contribution to an established nonfiction canon on governing complex, tightly coupled systems. It borrows from cybernetics and control theory, the reliability-and-failure literature, and the accountability tradition that shows how outcomes are produced while responsibility diffuses. Its narrower claim is simply this: as machine-mediated judgment accelerates, permission must become executable (legible, bounded, and auditable) on the same timescale at which that judgment executes.

The book argues that what is changing today is not that machines are entering the grid. Machines have shaped grid operations for more than a century. What is changing—quietly, and with far greater consequence—is that judgment itself is beginning to relocate. The structured prioritization of outcomes under constraint is moving upstream, into software systems that prepare decisions long before any operator throws a switch or any regulator asks for an explanation.

This argument is neither speculative nor alarmist. Artificial intelligence is entering critical infrastructure not as formal authority, but as advice. And advice, when relied upon under pressure, hardens into default. Defaults, once normalized, become authority. By the time institutions recognize that authority has moved, it is often too late to recover it without failure.

Delegated Judgment Has a History—and a Boundary

The electric grid has always relied on delegated judgment. From James Watt’s flyball governor to automatic generation control and supervisory control systems, feedback mechanisms have long sensed deviation and corrected it faster than human intervention could manage. Over time, those mechanisms scaled from local devices into regional and national control architectures.

What made those transitions governable was not restraint in engineering ambition, but clarity in institutional design. Operators supervised automated processes. Control logic executed within approved bounds. When failures occurred, utilities and regulators could reconstruct decisions with precision: what information was available, what constraints were in force, what actions were authorized, and where responsibility lay.

That legibility mattered. It allowed oversight to function not only after the fact, but prospectively—through standards, operating requirements, and enforceable limits. Delegation existed, but it was bounded. Authority did not migrate invisibly.

AI Changes Where Judgment Is Exercised

Contemporary AI systems now entering grid operations are, by design, limited in scope. They forecast outage probability, rank assets by risk, cluster customer calls, estimate restoration timelines, and recommend crew staging or sequencing. They are typically described as “decision support,” not control.

From a governance perspective, that distinction obscures more than it clarifies. A feeder criticality ranking is not neutral analytics. It encodes a prioritization framework—a theory of consequence expressed in code—about whose service matters most when not all can be restored at once. A restoration optimizer does not merely improve efficiency; it allocates delay. A model that collapses uncertainty into a single recommended plan narrows the space of contestable options precisely when uncertainty is highest.
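A small sketch can make this concrete. The feeders, attributes, and weights below are entirely hypothetical and are not drawn from the book or from any utility's practice; the point is only that identical data yields a different restoration order once the weights change, so the weights themselves carry the prioritization.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feeder:
    name: str
    customers: int       # customers served (hypothetical figure)
    critical_sites: int  # e.g. hospitals or water plants (hypothetical attribute)


def rank(feeders, w_customers, w_critical):
    """Return feeder names ordered by weighted score, highest first."""
    def score(f):
        return w_customers * f.customers + w_critical * f.critical_sites
    return [f.name for f in sorted(feeders, key=score, reverse=True)]


# Illustrative data only.
FEEDERS = [
    Feeder("F-101", customers=4200, critical_sites=0),
    Feeder("F-202", customers=900, critical_sites=2),
    Feeder("F-303", customers=2500, critical_sites=1),
]

# Same feeders, two different theories of consequence.
by_headcount = rank(FEEDERS, w_customers=1.0, w_critical=0.0)
by_critical = rank(FEEDERS, w_customers=0.0, w_critical=1.0)

print(by_headcount)  # ['F-101', 'F-303', 'F-202']
print(by_critical)   # ['F-202', 'F-303', 'F-101']
```

Neither ordering is wrong as arithmetic. Choosing between them is a judgment about whose service matters most, and in a deployed system that choice is usually made once, upstream, by whoever sets the weights.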

In practice, authority is exercised not only at the moment of execution, but at the moment the decision space is shaped. By the time a breaker is closed or a dispatch instruction issued, the outcome may already be largely determined by upstream computational judgment.

The Latency Gap

Institutions govern on deliberative timescales. Software operates continuously. This mismatch creates a growing latency gap between machine-mediated judgment and institutional oversight. Decisions may be effectively settled before reporting requirements, after-action reviews, or compliance mechanisms can engage. When outcomes are contested, institutions are left reconstructing decisions that were never explicitly authorized—only normalized through repeated reliance.

This is not a failure of intent. It is a structural mismatch. Responsibility remains formally human, but influence has already migrated into technical systems that are difficult to inspect, challenge, or unwind under stress.

In public-consequence systems, performance alone cannot confer legitimacy. Accuracy does not substitute for permission. Efficiency does not replace accountability. The public will tolerate hardship. It will not tolerate unaccountable hardship.

A Constitutional Architecture

The governance problem posed by AI in the grid is not solved by policy statements, ethics frameworks, or assurances of human oversight. It is solved—or not solved—by architecture.

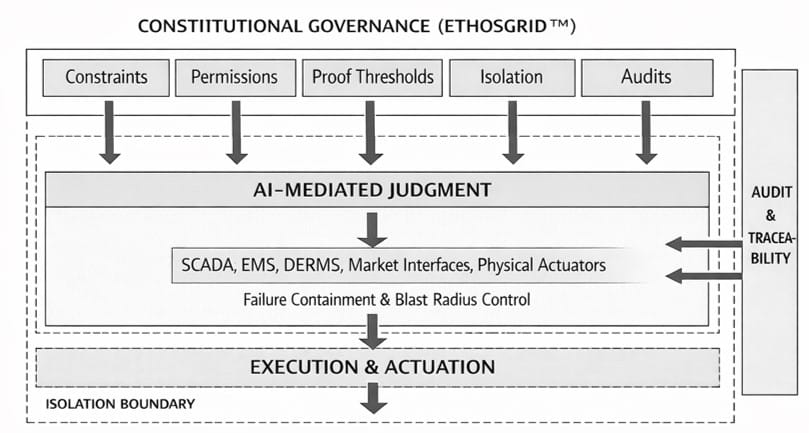

EthosGrid is a constitutional governance architecture designed to intervene where authority actually begins to move: the preparation of judgment inside software-mediated systems. Rather than treating governance as an external review function, EthosGrid embeds governance directly into the operational stack—upstream of execution and downstream of formal authority.

Its core premise is simple and institutional in nature: capability and permission must be separated. Modern AI systems can generate recommendations, rankings, and optimization outputs at machine speed. Left unchecked, that capability tends to collapse into de facto authority through habit. EthosGrid prevents that collapse by inserting a governed judgment layer between AI systems and execution.

Governing Judgment, Not Just Action

Traditional oversight focuses on executed actions: switching events, dispatch outcomes, market results. EthosGrid shifts attention upstream, to the moment when decisions are prepared—when options are ranked, uncertainty is compressed, and certain paths are rendered reasonable while others disappear.

EthosGrid does not replace SCADA, EMS, DERMS, market platforms, or operator authority. It governs how AI-mediated judgment may inform those systems. It defines what kinds of recommendations may be generated, under what conditions they may be relied upon, and what evidence must be produced as a condition of use.

Authority, in this framing, resides wherever consequence is allocated under constraint. EthosGrid treats that allocation as a governed act.

The Constitutional Artifacts

EthosGrid operationalizes governance through five enforceable artifact classes, aligned with regulatory practice while operating at machine speed:

- Constraints encode non-negotiable limits on objectives, risk exposure, and protected classes of service.

- Permissions specify which recommendations may proceed under defined conditions and when escalation is required.

- Proof Thresholds require explicit treatment of uncertainty and evidentiary sufficiency before reliance is permitted.

- Isolation enforces blast-radius control, containing failures before they propagate into execution.

- Audits ensure traceability and reconstruction, enabling investigation, enforcement, and public accountability.

Together, these artifacts form a constitutional spine: governance as a condition of operation, not a post-event narrative.
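This article does not specify a schema for these artifacts, so the following is an illustration under stated assumptions rather than the EthosGrid design. It shows one way the first four classes might be declared as data, and one way the Audits class might support reconstruction: an append-only log in which each entry commits to the previous one by hash, so later tampering is detectable. All names (`Constitution`, `AuditLog`, the field names) are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Constitution:
    """Hypothetical declarative bundle of four artifact classes."""
    protected_feeders: frozenset     # Constraints: service that may not be deprioritized
    auto_allowed_actions: frozenset  # Permissions: actions usable without escalation
    min_confidence: float            # Proof threshold: minimum confidence for reliance
    max_deferred_feeders: int        # Isolation: cap on the reach of one recommendation


@dataclass
class AuditLog:
    """Audits: append-only log; each entry's hash covers the previous hash."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(prev: str, record: dict) -> str:
        payload = json.dumps(record, sort_keys=True)
        return hashlib.sha256((prev + payload).encode("utf-8")).hexdigest()

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else ""
        digest = self._digest(prev, record)
        self.entries.append({"record": record, "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; False if any entry was altered or reordered."""
        prev = ""
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(prev, entry["record"]):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append({"recommendation": "stage_crews", "verdict": "proceed"})
log.append({"recommendation": "defer F-202", "verdict": "reject"})
intact = log.verify()

# Simulate an after-the-fact edit to the first record.
log.entries[0]["record"]["verdict"] = "reject"
tampered_detected = not log.verify()
```

A hash chain is only one possible mechanism for traceability; it is used here because it is compact enough to show in full, not because the source prescribes it.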

The Judgment-Layer Governor

EthosCore is the runtime embodiment of this architecture. It operates at the judgment layer of the utility software stack—above execution and actuation, but below institutional authority. EthosCore evaluates AI-generated recommendations against constitutional artifacts in real time, determining whether they may proceed, must be modified, require escalation, or must be rejected.

From a regulatory perspective, EthosCore functions as a control surface for delegated judgment. It does not decide on behalf of the institution. It enforces the conditions under which decisions may be prepared and relied upon, restoring legibility without sacrificing speed.
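The four outcomes described above (proceed, modify, escalate, reject) can be sketched as a small decision function. This is a minimal illustration, not EthosCore itself: the `Recommendation` fields, the rule parameters, and in particular the order in which checks are applied are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    PROCEED = "proceed"
    MODIFY = "modify"
    ESCALATE = "escalate"
    REJECT = "reject"


@dataclass(frozen=True)
class Recommendation:
    action: str              # hypothetical action label
    deferred_feeders: tuple  # feeders whose restoration the plan delays
    confidence: float        # model's stated confidence, 0.0 to 1.0


def evaluate(rec, protected, auto_allowed, min_confidence, max_deferred):
    """Return (verdict, reason). The check order is an assumed policy choice."""
    # Constraint: a plan that delays protected service is never admissible.
    if set(rec.deferred_feeders) & set(protected):
        return Verdict.REJECT, "defers a protected feeder"
    # Proof threshold: insufficient evidence means a human must decide.
    if rec.confidence < min_confidence:
        return Verdict.ESCALATE, "confidence below proof threshold"
    # Permission: only pre-approved action types may be relied on directly.
    if rec.action not in auto_allowed:
        return Verdict.ESCALATE, "action requires human authorization"
    # Isolation: an over-broad plan must be narrowed before use.
    if len(rec.deferred_feeders) > max_deferred:
        return Verdict.MODIFY, "plan exceeds isolation limit"
    return Verdict.PROCEED, "within all bounds"


# Illustrative rule set only.
RULES = dict(protected={"F-202"}, auto_allowed={"stage_crews"},
             min_confidence=0.8, max_deferred=2)

ok = evaluate(Recommendation("stage_crews", ("F-101",), 0.90), **RULES)
blocked = evaluate(Recommendation("stage_crews", ("F-202",), 0.99), **RULES)
unsure = evaluate(Recommendation("stage_crews", ("F-101",), 0.50), **RULES)
too_wide = evaluate(Recommendation("stage_crews", ("F-101", "F-303", "F-404"), 0.90), **RULES)
```

Note that a high-confidence recommendation is still rejected when it violates a constraint: in this sketch, accuracy never substitutes for permission. Returning a reason alongside each verdict is what would let the decision be written to an audit record and reconstructed later.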

Why Architecture Matters Now

AI systems are already embedded in advisory workflows. Defaults are forming. But most have not yet crossed into direct execution. Integration choices remain reversible. This is the window in which governance can arrive as architecture rather than remediation.

The electric grid is uniquely suited to this reckoning. It is already regulated, already audited, and already required to explain itself after failure. Harm is immediate. Consequences are visible. Authority must be reconstructible.

If AI-mediated judgment cannot be bounded and made legible here—where governance maturity is highest—it will not be bounded elsewhere. Conversely, success in this domain establishes a model for governing intelligence across all critical infrastructure.

The Question That Remains

The question confronting institutions is no longer whether AI will influence operations. It already does.

The question is whether authority exercised through AI-mediated systems will remain visible, bounded, and answerable—or whether it will emerge by default, normalized through practice rather than authorized through governance.

Advice will arrive first. The only remaining choice is whether authority follows by accident, or by design.

Beyond the Grid

The electric grid is not unique in confronting this moment. It is simply early. Across modern society, the same pattern is repeating wherever complex systems operate under public consequence. In transportation networks, algorithmic systems increasingly determine routing priorities, congestion responses, and emergency access. In healthcare, clinical decision-support tools shape triage, diagnosis, and resource allocation long before physicians act. In financial markets, automated risk models and trading systems set the tempo of capital flows, liquidity, and systemic exposure faster than regulators can intervene. In public administration, algorithmic screening systems influence eligibility, enforcement, and allocation decisions that carry legal and human consequences.

In each case, artificial intelligence arrives first as assistance. It promises efficiency, consistency, and foresight. And in each case, the same institutional risk follows: judgment migrates upstream into systems optimized for speed, while governance remains downstream, attached to outcomes rather than embedded in decision formation.

The lesson of the grid is not about electricity. It is about delegated power. Infrastructure becomes cognitively mediated when machines do not merely execute instructions, but prepare decisions that shape what action becomes possible. When that preparation is left ungoverned, authority does not vanish—it relocates, quietly, into architectures that were never designed to carry it.

EthosGrid is offered not as a sector-specific solution, but as a generalizable constitutional pattern: governance embedded at the judgment layer; capability separated from permission; accountability preserved at machine speed. The particular technologies will differ. The institutional requirement will not.

Modern societies have learned, repeatedly, that power exercised without legibility eventually demands correction—often through crisis. The opportunity before us is to govern intelligent infrastructure before failure forces that reckoning. The grid shows that this is still possible. It may be the last system to offer that chance before cognitive infrastructure becomes the default condition of modern life.

For more information on The Cognitive Grid, visit www.AIxEnergy.io/CognitiveGrid.

© 2026 Brandon N. Owens. All rights reserved. The Cognitive Grid and the EthosGrid architecture, including associated concepts, terminology, frameworks, and design elements, are proprietary and protected. No reproduction, distribution, or derivative use without prior written permission.