How Electricity Became the Hidden Frontline in the U.S.–China AI Rivalry

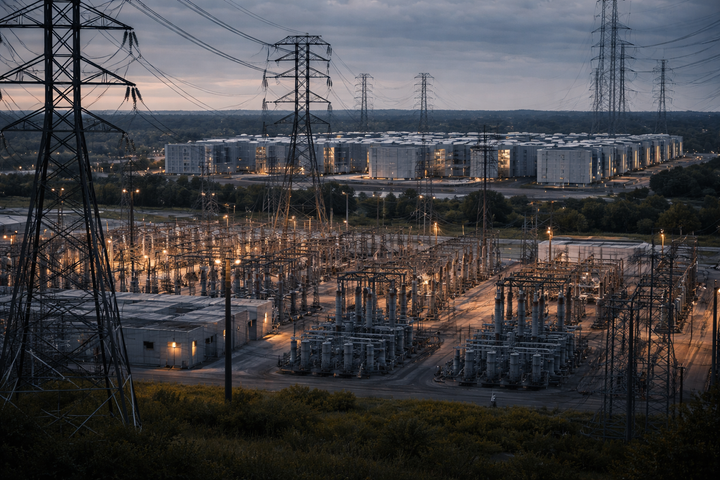

The AI conversation has long centered on algorithms, data, and hardware, but beneath every inference lies a more fundamental constraint: electricity. The key question is not only whose code is smartest, but also whose power stays on.