The OpenAI–AMD Accord in Context: From Silicon Optionality to Grid Sovereignty

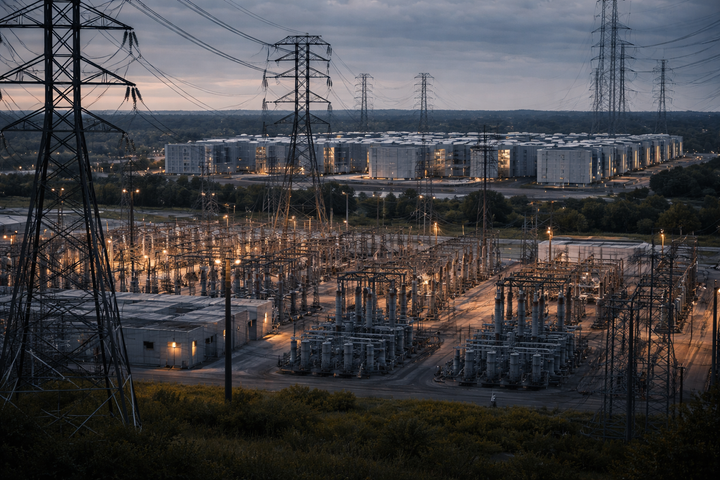

OpenAI’s 6-GW deal with AMD marks AI’s growing dependence on physical power supply. The deal is a milestone that links silicon to substations and forces planners and policymakers to treat compute demand as part of the energy system, not apart from it.