The Utility-AI Playbook: A Practitioner’s View from the Grid Edge

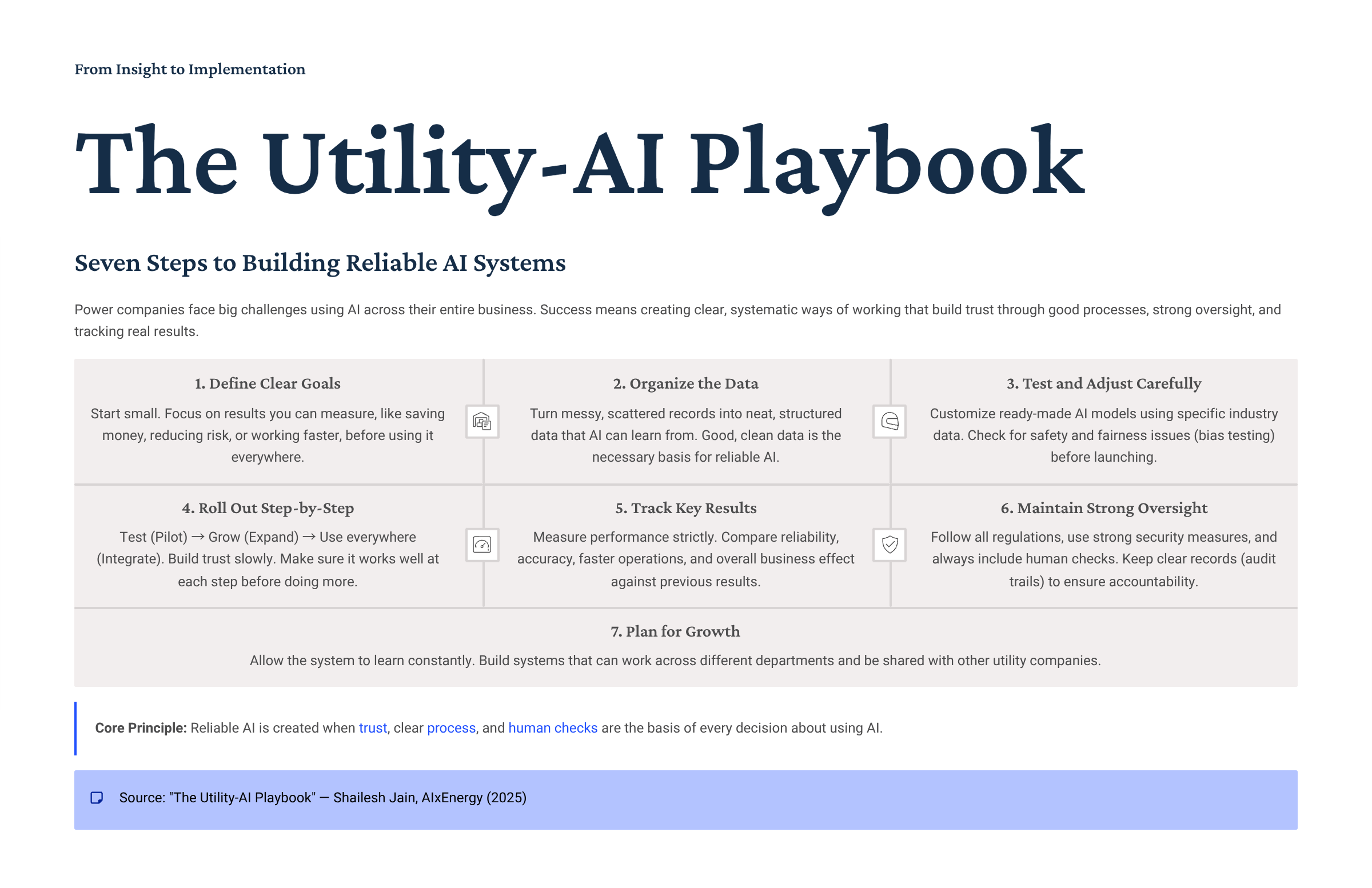

AI in utilities isn’t about replacing people—it’s about process, trust, and governance. The Utility-AI Playbook outlines seven steps to make intelligence utility-grade: start small, structure data, fine-tune, phase in, measure, govern, and evolve. Reliability remains human-led.

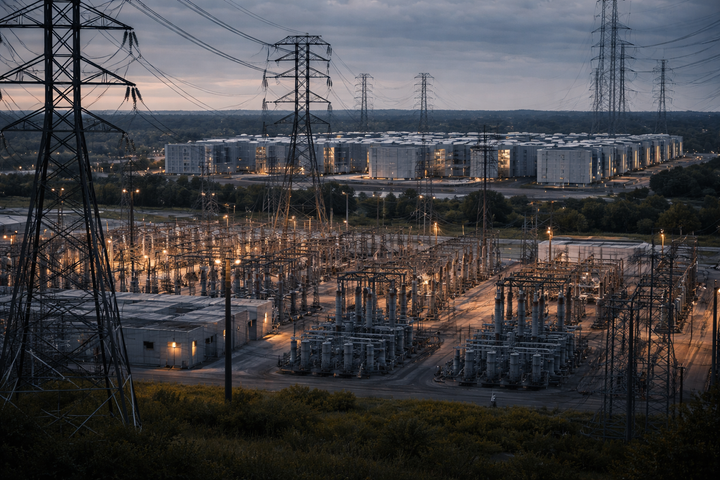

When SCADA first entered the substation, engineers met it with folded arms. Many of us who grew up around relays, analog meters, and switchyards learned to trust the physical world—the smell of ozone, the vibration of a transformer, the needle of an ammeter. Letting a remote signal tell us what was happening miles away required not just new technology, but a new mindset. We didn’t call it “digital transformation” back then—we just called it change, and it took pilots, patience, and a lot of coffee to make it stick.

That was half a century ago. Today, the grid stands on the brink of another transformation—one defined not by electrons alone, but by intelligence. Artificial intelligence is no longer confined to tech labs or data centers; it’s entering substations, control rooms, and utility planning departments. And much like SCADA or AMI before it, AI is meeting the same old skepticism: “Can we really trust it?”

The answer, as before, lies not in the technology itself but in process. Utilities have always advanced through structured, stepwise adoption—pilot, verify, scale. What separates a failed innovation from a lasting one is not the brilliance of the idea, but the rigor of its execution. If AI is to become truly utility-grade, it must be built on that same foundation: reliability, accountability, and governance.

Step 1: Start with the Use Case, Not the Hype

Every tool that lasts in a utility starts with a problem, not a pitch. SCADA didn’t begin as an experiment in automation—it was a visibility solution. AMI wasn’t about smart meters; it was about smarter billing and outage management. AI must follow that logic.

The first step is defining why you need AI. What decision does it accelerate? What risk does it mitigate? What cost does it reduce? If those answers aren’t clear, the technology becomes a hammer searching for nails. The best AI deployments I’ve seen begin with humble, well-bounded questions: “Can we improve DER hosting analysis accuracy?” “Can we automate switching sequence validation?” “Can we predict asset failure earlier?” These questions may sound small—but so did the first SCADA experiments that only monitored a single substation.

Start small, but design for scale. Every success story in utility modernization—from distribution automation to demand response—followed the same trajectory: prove it locally, then scale system-wide.

Step 2: Structure the Data Before You Train the Model

Utilities are rich in data but poor in structure. We have terabytes of load profiles, breaker operations, outage reports, and regulatory filings, but they live in silos—each with its own taxonomy, timestamping, and engineering shorthand. Before any AI model can be trained, this fragmented ecosystem needs order.

The process begins with data literacy: understanding what data exists, who owns it, and how it’s used. The next step is harmonization. Create common schemas that define terminology across departments—whether it’s voltage levels, equipment IDs, or naming conventions. Once the dictionary is consistent, AI can begin to learn meaningfully.
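As a minimal sketch of what harmonization can look like in practice, the snippet below maps department-specific spellings of equipment IDs and voltage values onto one canonical form. The function names, the `PREFIX-0000` ID convention, and the record fields `id` and `voltage` are all assumptions made for illustration; a real schema would come from the utility's own asset registry standards.

```python
import re

# Assumed unit multipliers for converting to kilovolts (illustrative only).
_UNIT_TO_KV = {"v": 0.001, "kv": 1.0}


def normalize_voltage_kv(raw: str) -> float:
    """Parse strings such as '12.47 kV', '12470V', or '12,470 v' into kilovolts."""
    text = raw.strip().lower().replace(",", "")
    match = re.fullmatch(r"([0-9]*\.?[0-9]+)\s*(kv|v)", text)
    if match is None:
        raise ValueError(f"unrecognized voltage: {raw!r}")
    value, unit = match.groups()
    return round(float(value) * _UNIT_TO_KV[unit], 3)


def normalize_equipment_id(raw: str) -> str:
    """Collapse 'xfmr 0042', 'XFMR-42', or 'Xfmr_42' into the assumed form 'XFMR-0042'."""
    match = re.fullmatch(r"\s*([A-Za-z]+)[\s_\-]*0*([0-9]+)\s*", raw)
    if match is None:
        raise ValueError(f"unrecognized equipment id: {raw!r}")
    prefix, number = match.groups()
    return f"{prefix.upper()}-{int(number):04d}"


def harmonize(record: dict) -> dict:
    """Return a record in the shared schema; the input keys are hypothetical."""
    return {
        "equipment_id": normalize_equipment_id(record["id"]),
        "voltage_kv": normalize_voltage_kv(record["voltage"]),
    }


if __name__ == "__main__":
    # The same asset as three departments might have recorded it.
    for rec in (
        {"id": "xfmr 0042", "voltage": "12.47 kV"},
        {"id": "XFMR-42", "voltage": "12470V"},
        {"id": "Xfmr_42", "voltage": "12,470 v"},
    ):
        print(harmonize(rec))
```

All three sample records resolve to the same canonical entry, which is the point: the model sees one asset, not three.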

Think of structured data as the foundation of AI literacy. When we rolled out GIS integration twenty years ago, utilities spent years cleaning up asset registries before seeing value. The same principle applies here. A model trained on messy, inconsistent data will generate elegant nonsense.

AI doesn’t need more data—it needs better data. Contextual, structured, and relevant.

Step 3: Fine-Tune Responsibly

You don’t need to train a billion-parameter model from scratch to unlock value. Parameter-efficient techniques like Low-Rank Adaptation (LoRA) now allow utilities to fine-tune large models with manageable datasets. This is where the real engineering discipline comes in.
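To make the LoRA claim concrete, here is a rough back-of-the-envelope sketch. LoRA freezes a weight matrix of shape d_out × d_in and trains two small factors of shapes d_out × r and r × d_in instead, so the trainable parameter count per adapted matrix is r × (d_out + d_in). The 4096 × 4096 layer size and rank 8 below are illustrative assumptions, not figures from any particular model.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Parameters in one dense weight matrix that full fine-tuning would update."""
    return d_out * d_in


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the two low-rank factors (d_out x rank and rank x d_in)."""
    if rank <= 0 or rank > min(d_out, d_in):
        raise ValueError("rank must be between 1 and min(d_out, d_in)")
    return rank * (d_out + d_in)


def trainable_fraction(d_out: int, d_in: int, rank: int) -> float:
    """Share of the original matrix size that LoRA actually trains."""
    return lora_trainable_params(d_out, d_in, rank) / full_params(d_out, d_in)


if __name__ == "__main__":
    d_out, d_in, rank = 4096, 4096, 8  # assumed, illustrative dimensions
    print("full fine-tuning:", full_params(d_out, d_in))
    print("LoRA factors:    ", lora_trainable_params(d_out, d_in, rank))
    print("fraction trained:", trainable_fraction(d_out, d_in, rank))
```

For these assumed dimensions the adapter trains 65,536 parameters against 16,777,216 in the frozen matrix, roughly 0.4 percent. That ratio is what makes fine-tuning feasible on a utility's own hardware and data volumes.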

The fine-tuning process should unfold in three deliberate stages:

- Domain Adaptation: Train the base model on the utility’s internal corpus—technical manuals, line diagrams, maintenance logs, and operating procedures. This ensures the model “speaks utility.”

- Instruction Tuning: Introduce human reasoning through instruction–response pairs. For instance:

  - Input: “Evaluate the voltage impact of adding 500 kW of solar at Feeder 14.”

  - Output: “Voltage rise exceeds ANSI limits; recommend Volt-VAR inverter settings.”

  This step transforms expert logic into machine-readable intelligence.

- Alignment and Validation: Incorporate safety and compliance guardrails. The model must know what not to do—just as a relay knows when not to close.

Fine-tuning responsibly mirrors commissioning equipment: design, test, align. We don’t energize a transformer without inspection; we shouldn’t deploy an AI model without validation.
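One way the instruction-tuning stage might be captured is as a reviewed set of instruction–response records written out as JSON Lines, a format many fine-tuning toolchains accept. This is a sketch under assumptions: the field names `instruction`, `response`, and `reviewed_by`, and the rule that every pair needs a named reviewer, are hypothetical choices rather than a requirement of any specific framework.

```python
import json
from dataclasses import asdict, dataclass
from typing import Iterable


@dataclass(frozen=True)
class InstructionPair:
    instruction: str
    response: str
    reviewed_by: str  # hypothetical field: the engineer who signed off on the pair


def to_jsonl(pairs: Iterable[InstructionPair]) -> str:
    """Serialize pairs to JSON Lines, rejecting empty or unreviewed entries."""
    lines = []
    for pair in pairs:
        if not pair.instruction.strip() or not pair.response.strip():
            raise ValueError("instruction and response must be non-empty")
        if not pair.reviewed_by.strip():
            raise ValueError("every pair needs a named reviewer")
        lines.append(json.dumps(asdict(pair), ensure_ascii=False))
    return "\n".join(lines)


if __name__ == "__main__":
    pairs = [
        InstructionPair(
            instruction="Evaluate the voltage impact of adding 500 kW of solar at Feeder 14.",
            response="Voltage rise exceeds ANSI limits; recommend Volt-VAR inverter settings.",
            reviewed_by="senior.planner",  # placeholder name
        )
    ]
    print(to_jsonl(pairs))
```

Rejecting unreviewed pairs at serialization time is a small commissioning check of its own: nothing reaches the training set without an engineer's name attached.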

Step 4: Roll Out in Phases

Utilities thrive on phased implementation. Whether it’s a new substation automation system or a tariff redesign, the formula is the same: pilot, evaluate, scale.

- Phase 1 – Pilot (3–4 months): Select a focused use case. Build a dataset of 1,000–2,000 examples. Test outputs with senior engineers in the loop. The goal is not perfection but trust.

- Phase 2 – Expansion (6–8 months): Add adjacent use cases—load forecasting, reliability modeling, voltage optimization. Integrate the model with existing tools (CYME, Synergi, OMS, CIS). Capture user feedback for retraining.

- Phase 3 – Enterprise Integration (12+ months): Deploy AI models across departments. Introduce continuous learning pipelines that refine performance over time. Create department-specific model variants tuned to planning, operations, or customer service.

Each phase builds credibility. The process should be transparent, auditable, and paced for institutional absorption. Remember, trust in utilities isn’t built overnight—it’s earned, one accurate prediction at a time.

Step 5: Measure What Matters

Utilities don’t measure success by novelty—they measure it by performance. For AI, that means rigorous evaluation frameworks.

- Technical Accuracy: Are voltage calculations correct? Are outputs consistent with IEEE and NERC standards?

- Process Efficiency: How much time does AI save in report generation or analysis cycles?

- Reliability Metrics: Are outage forecasts improving? Are maintenance interventions more targeted?
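The first two metric families above can be reduced to a few simple calculations. The sketch below compares model voltage estimates against an engineer-validated reference and computes the share of analysis time saved. The function names, the per-unit sample values, the 0.005 p.u. tolerance, and the minute figures are illustrative assumptions, not recommended thresholds.

```python
from typing import Sequence


def _check(predicted: Sequence[float], reference: Sequence[float]) -> None:
    if not predicted or len(predicted) != len(reference):
        raise ValueError("inputs must be non-empty and of equal length")


def mean_absolute_error(predicted: Sequence[float], reference: Sequence[float]) -> float:
    """Average absolute gap between model output and the validated reference."""
    _check(predicted, reference)
    return sum(abs(p - r) for p, r in zip(predicted, reference)) / len(predicted)


def within_tolerance_rate(
    predicted: Sequence[float], reference: Sequence[float], tolerance: float
) -> float:
    """Fraction of predictions that land within +/- tolerance of the reference."""
    _check(predicted, reference)
    hits = sum(1 for p, r in zip(predicted, reference) if abs(p - r) <= tolerance)
    return hits / len(predicted)


def time_saved_fraction(baseline_minutes: float, assisted_minutes: float) -> float:
    """Share of the baseline cycle time removed by the AI-assisted workflow."""
    if baseline_minutes <= 0:
        raise ValueError("baseline must be positive")
    return (baseline_minutes - assisted_minutes) / baseline_minutes


if __name__ == "__main__":
    model_pu = [1.020, 1.040, 0.970, 1.060]      # assumed model estimates (per unit)
    reference_pu = [1.021, 1.037, 0.972, 1.049]  # assumed load-flow reference (per unit)
    print("MAE:", mean_absolute_error(model_pu, reference_pu))
    print("within 0.005 p.u.:", within_tolerance_rate(model_pu, reference_pu, 0.005))
    print("time saved:", time_saved_fraction(120, 45))
```

In this made-up sample, three of four estimates fall within tolerance and the assisted workflow removes 62.5 percent of the cycle time. The one miss is exactly the kind of case a senior engineer should review before the result is trusted.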

Benchmarks should be contextualized. Comparing AI to human performance isn’t about replacement—it’s about augmentation. If engineers spend less time wrangling data and more time solving problems, that’s success.

AI evaluation, like regulatory rate cases, should balance quantitative rigor with contextual judgment. A model may not always be right—but if it makes people more effective, it’s doing its job.

Step 6: Govern Relentlessly

In the power sector, governance is the difference between reliability and risk. We have checklists for everything—switching procedures, relay settings, safety tags. AI needs the same.

Governance should operate on three levels:

- Regulatory Compliance: Ensure models respect regional standards and reporting requirements. Train them on actual rulebooks—NERC CIP, IEEE standards, state regulations.

- Data Security: Treat models as critical infrastructure assets. Store them on-premises where feasible. Protect inputs and outputs as you would SCADA data.

- Engineering Oversight: Keep humans in the loop. No model should autonomously issue control actions without operator verification. Document every AI-assisted decision for traceability.
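The engineering-oversight rule can be expressed as a simple gate: a recommendation reaches the field only after a named operator approves it, and every review is logged whether or not it was approved. The sketch below is one possible shape for that gate. The class names, the log fields, and the `dispatch` callback are hypothetical; a production system would write to a tamper-evident store rather than an in-memory list.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, List


@dataclass(frozen=True)
class Recommendation:
    action: str
    rationale: str


@dataclass(frozen=True)
class AuditEntry:
    timestamp: str
    action: str
    rationale: str
    operator: str
    approved: bool


class DecisionLog:
    """In-memory audit trail of every operator review (illustrative only)."""

    def __init__(self) -> None:
        self.entries: List[AuditEntry] = []

    def review(self, rec: Recommendation, operator: str, approved: bool) -> bool:
        if not operator.strip():
            raise ValueError("a named operator is required")
        stamp = datetime.now(timezone.utc).isoformat()
        self.entries.append(
            AuditEntry(stamp, rec.action, rec.rationale, operator, approved)
        )
        return approved


def execute_if_approved(
    rec: Recommendation,
    log: DecisionLog,
    operator: str,
    approved: bool,
    dispatch: Callable[[str], None],
) -> bool:
    """Call dispatch only after the operator's approval has been recorded."""
    if log.review(rec, operator, approved):
        dispatch(rec.action)
        return True
    return False


if __name__ == "__main__":
    log = DecisionLog()
    rec = Recommendation("Open switch SW-12", "Isolate faulted section")  # made-up example
    sent: List[str] = []
    execute_if_approved(rec, log, "operator.a", False, sent.append)
    execute_if_approved(rec, log, "operator.b", True, sent.append)
    print(sent)
    print(len(log.entries), "reviews logged")
```

Logging rejections alongside approvals matters for defensibility: the record shows not only what was done, but what the model proposed and a human declined.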

AI without governance isn’t innovation—it’s exposure. A well-governed model doesn’t just make good recommendations; it makes defensible ones.

Step 7: Think Long Term

Utility innovation has always been evolutionary, not revolutionary. SCADA didn’t change the grid overnight—it matured through thousands of incremental upgrades. AI will be the same. The goal isn’t to build a flashy prototype; it’s to establish a durable capability.

Long-term planning means designing systems for extensibility. Models must adapt to new data sources, standards, and use cases. Feedback loops should turn every correction into training material. Cross-utility collaboration—anonymized and aggregated—will accelerate industry-wide learning. Imagine models that improve as neighboring grids learn, creating a network of collective intelligence.

This vision isn’t far off. It’s the natural progression of an industry that already understands feedback, control, and resilience.

The Human Factor

After three decades in T&D, I’ve learned that every great system, no matter how advanced, depends on the people who operate it. AI is no different. It will not replace human expertise—it will amplify it. The operator remains the ultimate authority. Every time a human adjusts a model’s recommendation based on experience, the system grows smarter.

That is the partnership we are building: a feedback loop between cognition and experience, between machine inference and human intuition. The self-learning grid isn’t about autonomy; it’s about collaboration.

Reliability has never been just an algorithm—it’s a culture. And in that culture, trust is earned slowly, but once earned, it endures. AI must live by those same rules.

Let the grids learn for themselves—but never without us.

Notes

- IEEE Standard 1547-2018, Interconnection and Interoperability of Distributed Energy Resources with Associated Electric Power Systems Interfaces (IEEE, 2018). https://standards.ieee.org/standard/1547-2018.html

- Hu, Edward J., et al. “LoRA: Low-Rank Adaptation of Large Language Models.” arXiv preprint (2021). https://arxiv.org/abs/2106.09685

- Edison Foundation / Institute for Electric Innovation, Electric Company Smart Meter Deployments: Foundation for a Smart Energy Grid (Edison Foundation, 2013). https://www.edisonfoundation.net/-/media/Files/IEI/publications/Final-Electric-Company-Smart-Meter-Deployments--Foundation-for-A-Smart-Energy-Grid.pdf

- U.S. Department of Energy, Advanced Metering Infrastructure and Customer Systems: Summary Report (DOE, 2016). https://www.energy.gov/sites/prod/files/2016/12/f34/AMI%20Summary%20Report_09-26-16.pdf

- U.S. Department of Energy, “Grid Modernization Initiative,” Energy.gov (DOE). https://www.energy.gov/gmi/grid-modernization-initiative

- Federal Energy Regulatory Commission, Commissioner-Led Reliability Technical Conference (FERC, 2024). https://www.ferc.gov/media/2024-commissioner-led-reliability-technical-conference

- North American Electric Reliability Corporation (NERC), Reliability Standards – Standards & Compliance (NERC). https://www.nerc.com/pa/Stand/Pages/Default.aspx

- National Association of Regulatory Utility Commissioners (NARUC), AI & Utility Regulation Resources (NARUC). https://pubs.naruc.org/pub/165824D5-A04C-0A98-6CC4-2F91B57ADA7E

- Electric Power Research Institute (EPRI), Monitoring and Advanced Data Analytics Program (EPRI). (EPRI member resource; publicly viewable via EPRI program descriptions.)